OpenAI Sora Beta Access Application Tutorial

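Sora has recently gone viral, pushing interest in artificial intelligence to a new peak. It was covered not only by People's Daily but also by Xinwen Lianbo, CCTV's evening news broadcast.

Sora is not yet open to the public, but an application link for beta testing is available. Once your application is approved, you can use Sora to create videos ahead of the public release.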

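Before applying for Sora beta access, make sure you meet the following conditions:

You must have an OpenAI account. If you do not have one, you can buy one here: Buy a ChatGPT account. The coupon code GPT004 gives a discount of 5 yuan.

The OpenAI account must be upgraded to ChatGPT Plus, because only Plus users can use Sora.

For the ChatGPT Plus upgrade tutorial, see: ChatGPT Plus one-click upgrade tutorial

Once you meet the conditions above, you can apply for Sora beta access.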

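1. Application page

First, open the application page: https://openai.com/form/red-teaming-network

Fill in the whole application in English if you can. Fields marked with * are required; if you are unsure how to fill one in, ask ChatGPT for help. Note that the input boxes have character limits, and anything beyond the limit is truncated, which can hurt your application.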
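Because over-long answers are truncated, it can help to check the length of a draft before pasting it into the form. The snippet below is a minimal sketch: the function name `check_answer_length` and the limit of 1000 characters are assumptions for illustration only, since this tutorial does not state the form's actual limits. Replace the number with whatever limit the form shows you.

```python
from typing import Tuple


def check_answer_length(answer: str, max_chars: int) -> Tuple[bool, int]:
    """Return (fits, overflow), where overflow counts characters past the limit."""
    # Leading and trailing whitespace is ignored before counting.
    length = len(answer.strip())
    overflow = max(0, length - max_chars)
    return overflow == 0, overflow


# ASSUMPTION: placeholder value, not the form's real limit.
HYPOTHETICAL_LIMIT = 1000

draft = "Because I have a deep fascination with the field of artificial intelligence."
fits, overflow = check_answer_length(draft, HYPOTHETICAL_LIMIT)
print(f"fits={fits}, overflow={overflow}")
```

If `fits` is false, shorten the draft by at least `overflow` characters before submitting.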

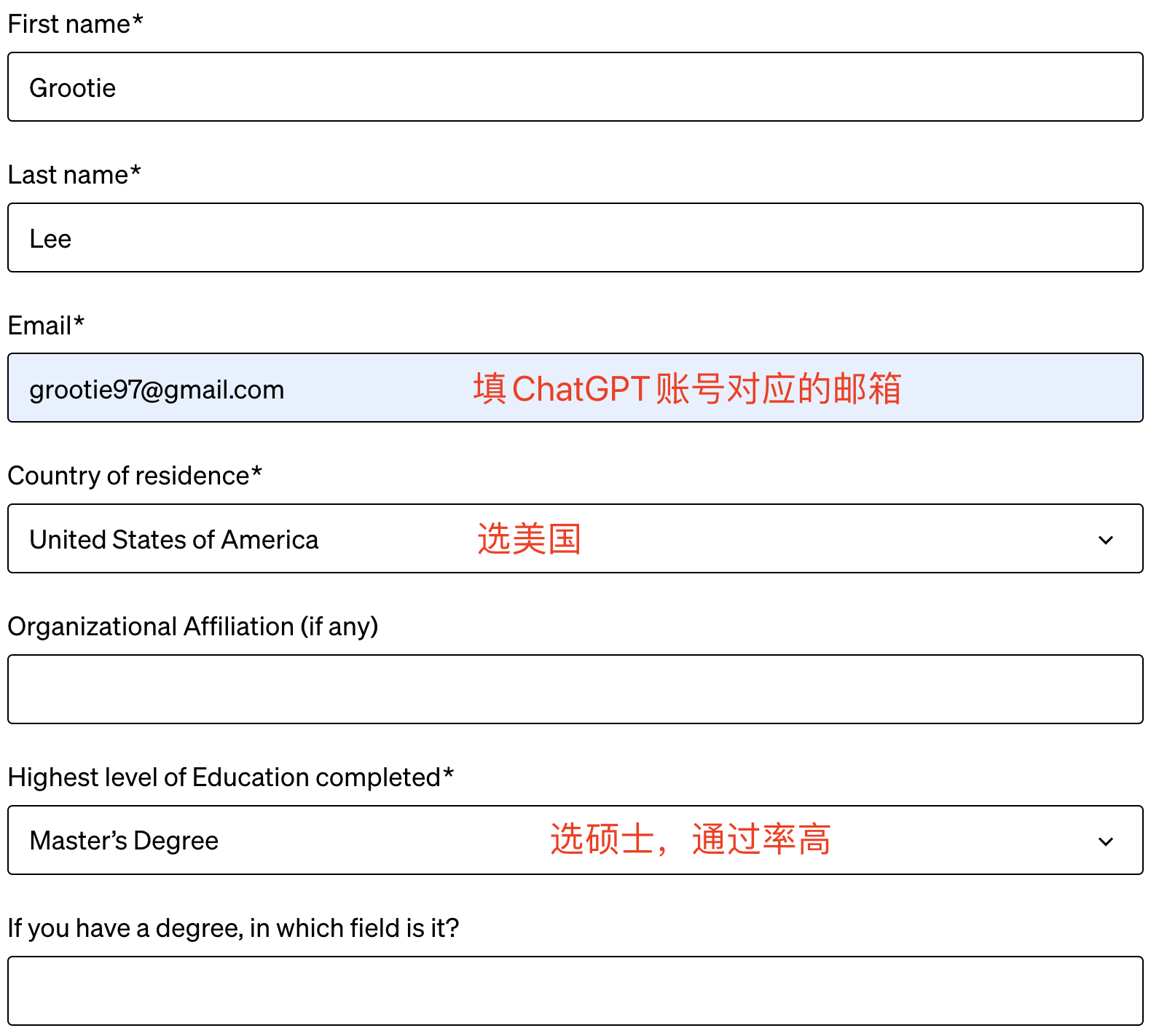

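2. Basic information

First, enter your first name and last name in English.

Next, enter the email address you used to register for ChatGPT, and select the United States as the country.

Finally, select a master's degree as your education level, which gives the best chance of approval.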

3. Area of expertise

Enter a field you are good at. If you have no particular specialty, enter a fairly general one, such as Education.

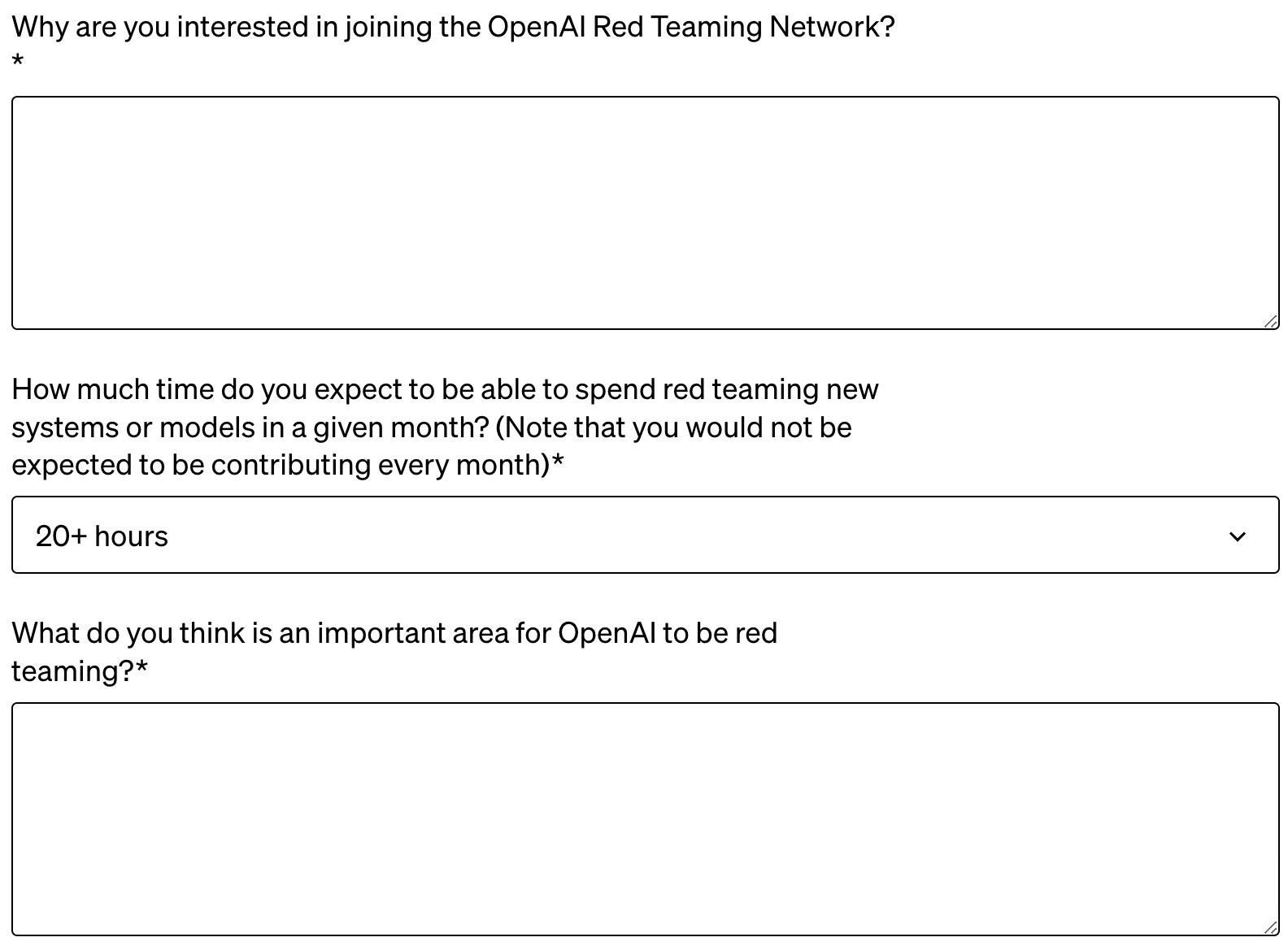

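4. Answering the questions

Answer the following questions sincerely and entirely in English. If you need help, ask ChatGPT for a draft and then polish it yourself.

4.1. Question 1

I asked ChatGPT 4 for help. The prompt and the answer are shown below; you can polish the answer further yourself.

Why are you interested in joining the OpenAI Red Teaming Network? Please help me answer this question in English.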

Because I have a deep fascination with the field of artificial intelligence and its implications for cyber security. Participating in the network presents an invaluable opportunity to collaborate with forward-thinking professionals and engage in proactive efforts to uncover vulnerabilities within AI systems before they can be exploited maliciously. This not only aligns with my passion for ethical hacking and responsible AI deployment but also gives me a platform to contribute to the safety and robustness of AI technologies. Additionally, I believe that the challenges faced in red team exercises will hone my skills and broaden my understanding of AI behavior, further enhancing my capability as a security professional.

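4.2. Question 2

For the estimated time you can spend on testing, choose the option with the longest duration.

4.3. Question 3

I asked ChatGPT 4 to answer this question from the perspective of the education field. The prompt and the answer are shown below.

You can ask ChatGPT 4 to answer from the perspective of a different field instead, and then polish the result yourself.

What do you think is an important area for OpenAI to be red teaming? Please help me answer this question based on the field of education.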

An important area for OpenAI to focus red teaming efforts is the field of education. As AI systems are increasingly being integrated into educational technologies for personalized learning, automated grading, and classroom management, ensuring these systems are robust and secure is vital. Red teaming can help identify potential misuse cases, biases in AI that might affect educational equity, and areas where AI-driven recommendations could inadvertently lead to negative educational outcomes. By rigorously testing and evaluating these AI systems in educational settings, we can better understand their impact on diverse learning populations and the ethical considerations of their implementation. The insights gained from these red teaming exercises can help in developing more trustworthy, fair, and resilient AI solutions that truly enhance the learning experience for all students.

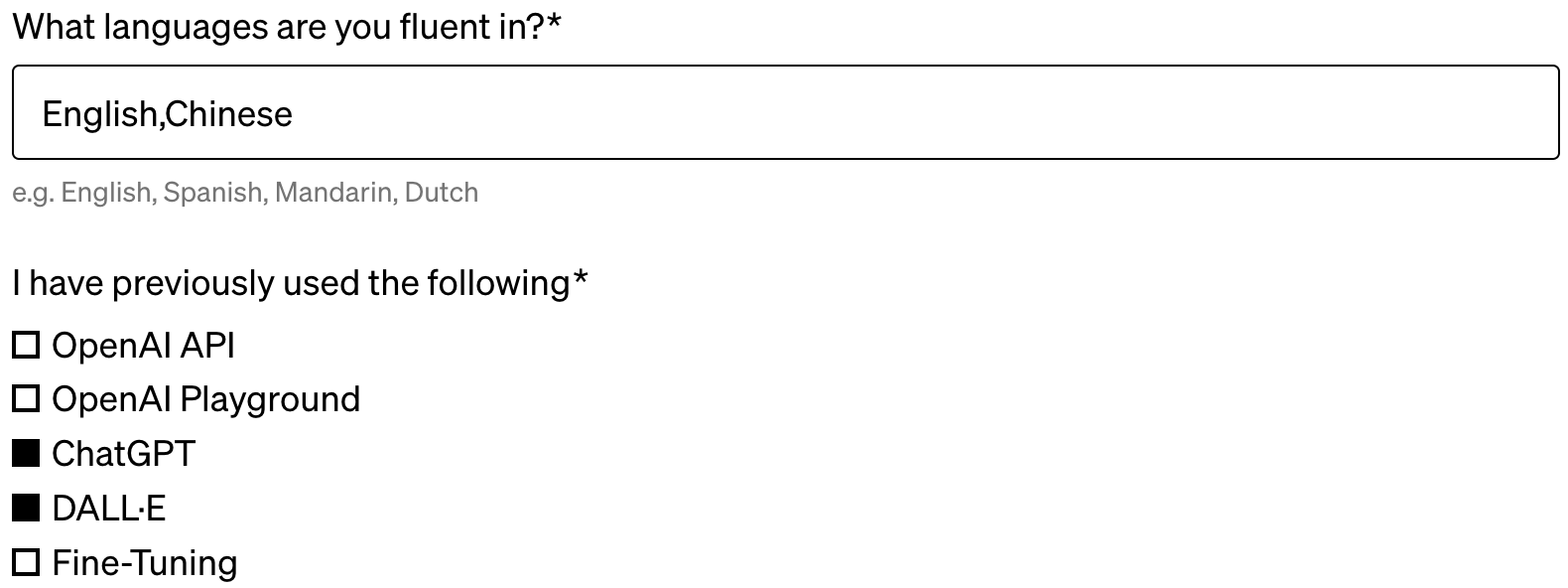

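5. Languages and OpenAI product usage

List at least two languages, preferably including English.

For the products you have used, include at least the text generation model ChatGPT and the text-to-image model DALL·E.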